Strategies for Reliable Schema Migrations

Adapted from a talk given at Kube Native 2024.

Introduction

Database schema migrations are a critical part of the software development lifecycle. They allow us to evolve our data model as our application grows and changes. However, migrations can also be a significant source of risk and downtime if not handled carefully.

Even when teams apply the common best practices, such as using a migration tool to automate changes and carefully examining each migration during code review, issues still slip through the cracks, and migrations remain a significant headache for many engineering teams.

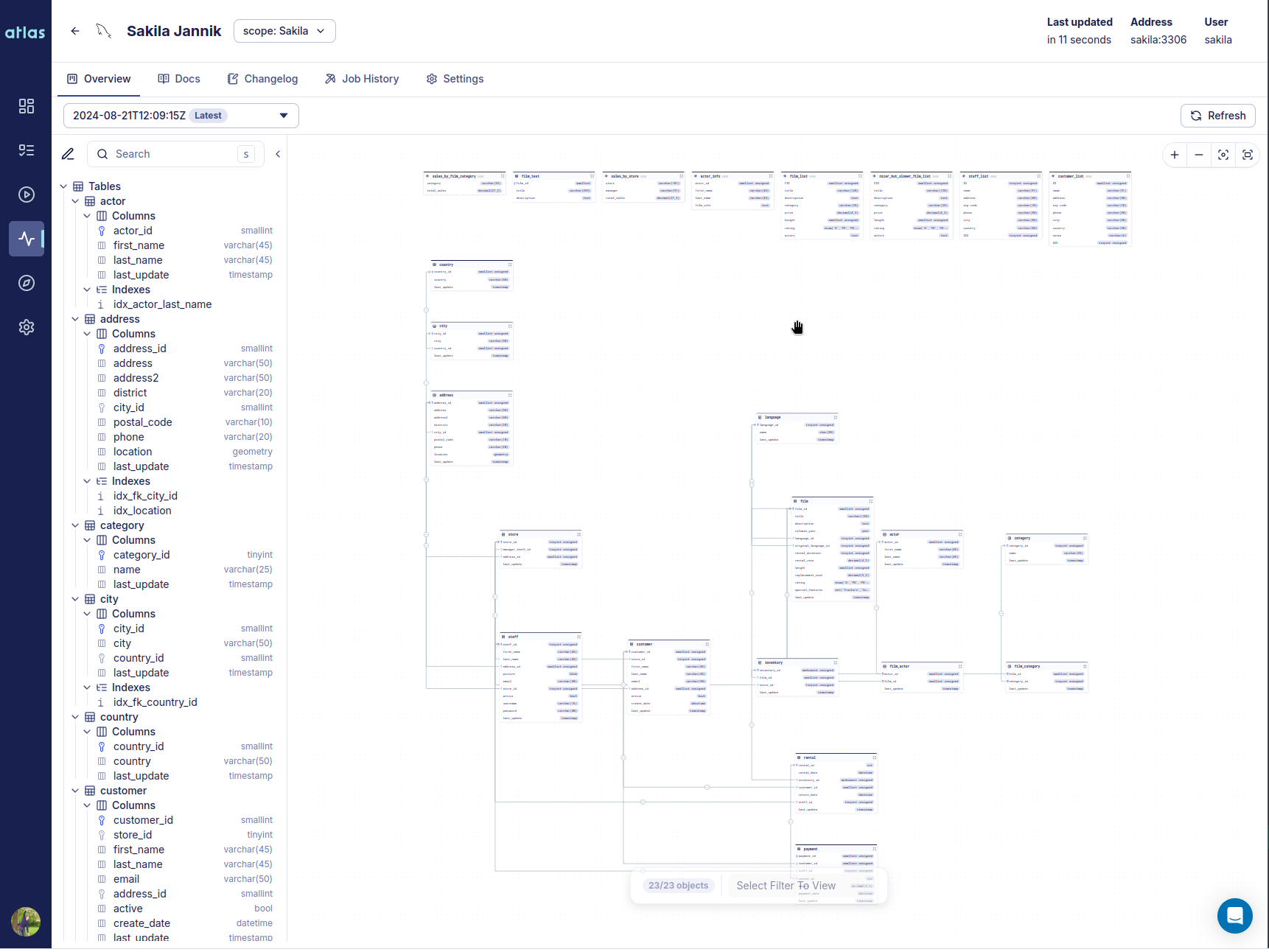

This post explores five strategies for making database schema migrations more reliable, and introduces Atlas, a database schema-as-code tool that helps teams make their schema changes both safer and easier to manage.