Announcing Atlas v1.0: A Milestone in Database Schema Management

We're excited to announce Atlas v1.0 - just in time for the holidays! 🎄

v1.0 is a milestone release. Atlas has been production-ready for a few years now, running at some of the top companies in the industry, and reaching 1.0 is our commitment to long-term stability and compatibility. It reflects what Atlas has become: a schema management product built for real production use that both platform engineers and developers love.

Here's what's in this release:

- Monitoring as Code - Configure Atlas monitoring with HCL, including RDS discovery and cross-account support.

- Schema Statistics - Size breakdowns, largest tables/indexes, fastest-growing objects, and growth trends over time.

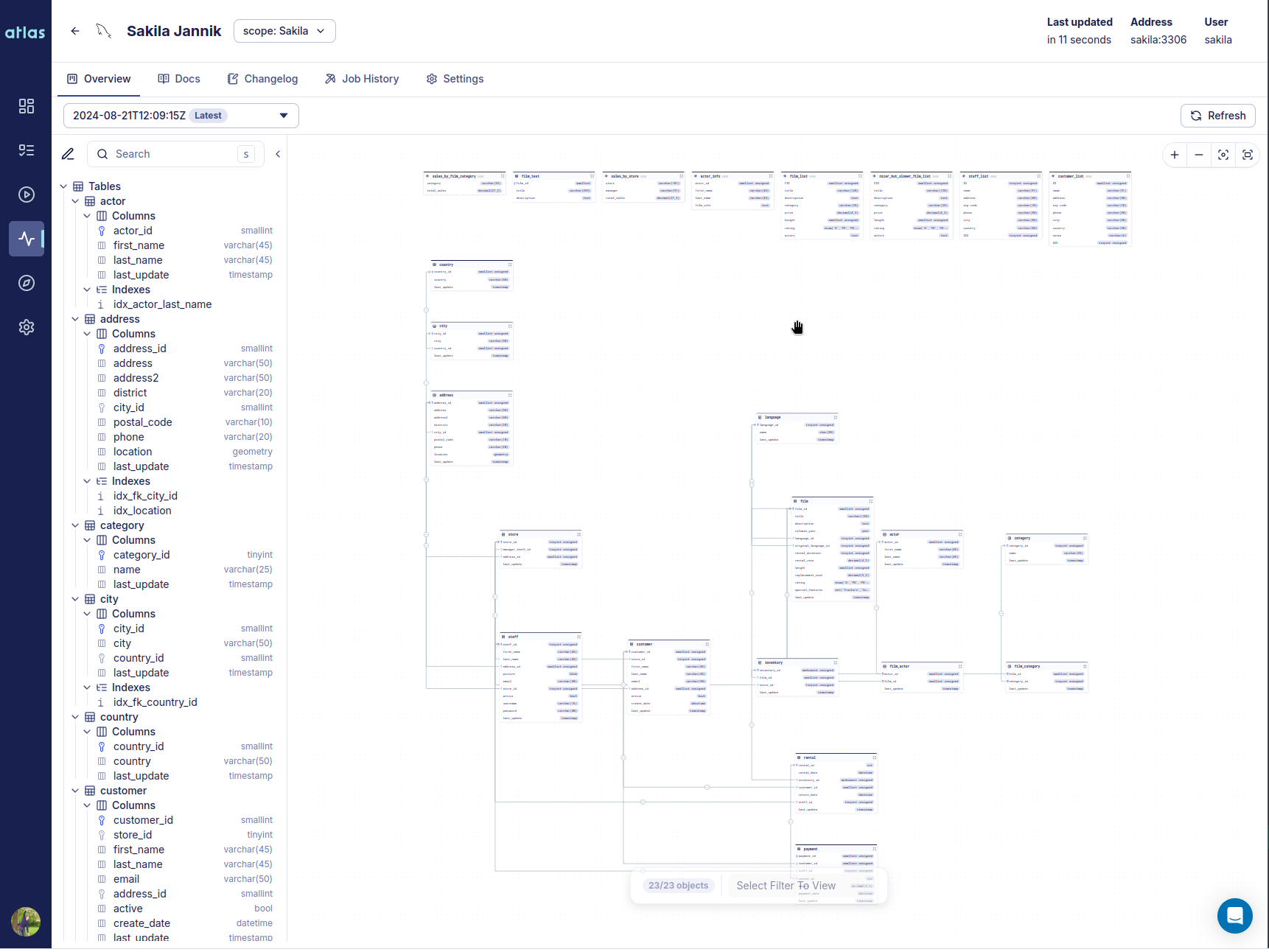

- Declarative Migrations UI - A new dashboard for databases, migrations, deployments, and status visibility.

- Database Drivers - Databricks, Snowflake, and Oracle graduate from beta to stable; plus improvements across Postgres, MySQL, Spanner, Redshift, and ClickHouse.

- Deployment Rollout Strategies - Staged rollouts (canaries, parallelism, and error handling) for multi-tenant and fleet deployments.

- Deployment Traces - End-to-end traceability for how changes move through environments.

- Multi-Config Files - Layer config files with `-c file://base.hcl,file://app.hcl`.