Deep Dive into Declarative Migrations

Prepared for an Atlas Community Webinar, October 2024

Introduction

In recent years, the shift to declarative resource management has transformed modern infrastructure practices. Groundbreaking projects like Terraform, for infrastructure as code, and Kubernetes, for container orchestration, have exemplified the power of this approach. By focusing on what the end state should be rather than how to achieve it, declarative methods make systems more scalable, predictable, and easier to maintain—essential qualities for handling today's complex environments.

However, when it comes to managing database schemas, the industry has been slow to adopt declarative workflows. Atlas was created almost four years ago to address this gap.

Atlas supports two kinds of database schema migration workflows:

- Versioned Migrations - each change to the database is described as a migration script, essentially a SQL file containing the SQL commands to apply the change. Migrations are versioned and applied in order.

  Contrary to most existing migration tools, Atlas relies on users defining the desired state of the database schema in code, and Atlas generates the necessary migration scripts to bring the database to the desired state.

- Declarative Migrations - the database schema is described in a declarative way, and changes are applied by comparing the desired schema with the current schema and generating the necessary SQL commands to bring the database to the desired state.

To date, most teams that used Atlas in production have used its versioned migration workflow, which combines the simplicity and explicitness of classic migration tools with the benefit of automated migration generation.

Recent improvements to Atlas have addressed many of the challenges and concerns teams have expressed in the past around using declarative migrations in production. In this post, we'll take a deep dive into the declarative migration workflow.

Declarative Migrations in Production?

Declarative migrations are a powerful concept and, though it may surprise you, they are not new. In previous incarnations, they were often referred to as "state-based" migrations, but they were never regarded as a production-grade solution. A quick look at the documentation of popular tools reveals that they are often discouraged:

Hibernate ORM:

Although the automatic schema generation is very useful for testing and prototyping purposes, in a production environment, it’s much more flexible to manage the schema using incremental migration scripts.

EF Core Docs:

EnsureCreated will create the database if it doesn't exist and initialize the database schema. If any tables exist (including tables for another DbContext class), the schema won't be initialized.

Liquibase:

State-based deployments offer a quick, simplistic way to determine change scripts and are useful for analysis and drift detection. Due to its simplicity, though, it’s unreliable and risky to use as a primary change management approach.

Challenges of Declarative Migrations

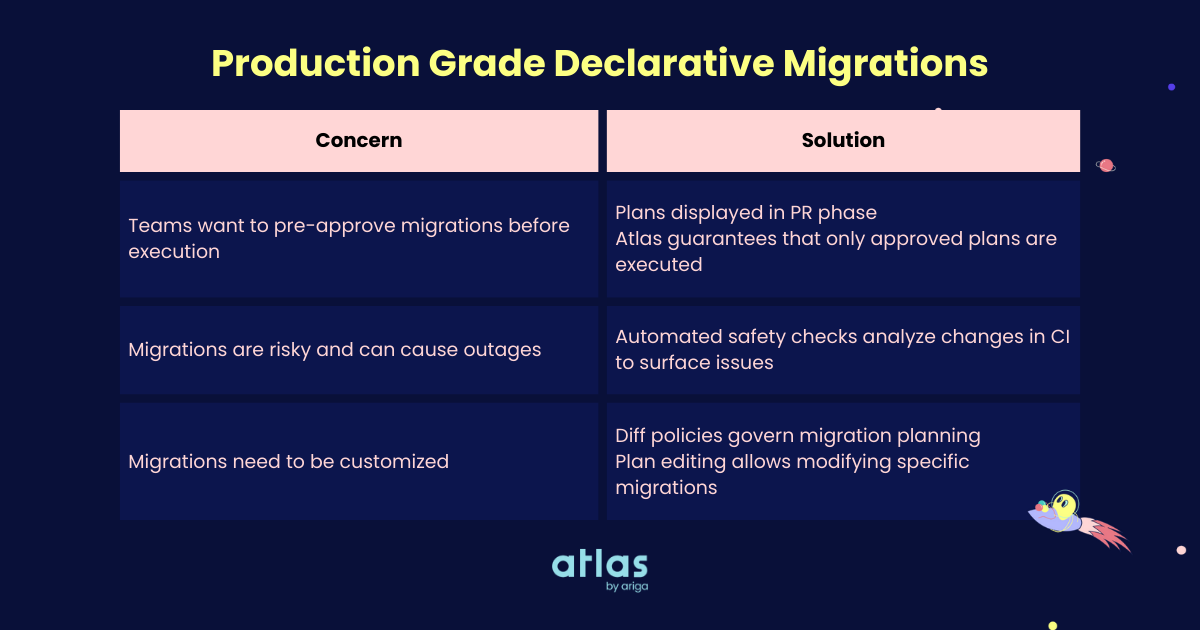

Having interviewed many engineers and architects about their experience with schema management, we've identified several concerns that teams have with declarative migrations:

Approval Process

Versioned migrations serve a dual purpose: they are both an explicit set of instructions for the migration tool and a source-controlled artifact that can be examined and approved by human reviewers.

For many teams, the idea of only versioning the desired state of the database schema and not the actual migration scripts means forfeiting their main mechanism for review and approval of schema changes. This is a valid concern, and any production-grade declarative migration tool should provide a way to review and approve changes before they are applied.

Safety

Schema changes are a risky business. If you are not careful, you can easily find yourself in a situation where you've accidentally deleted data, locked a table for writes for a long period of time, or introduced a production outage via a breaking schema change.

By definition, migrations generated by declarative workflows are non-deterministic, as they depend on the current state of the database. This is also one of their main advantages, as they can handle unexpected circumstances and reconcile drift automatically. However, many teams are uncomfortable with the idea of not knowing exactly what will be applied to their database.

As one user once wrote to us on Hacker News:

I'll never be comfortable with any tool for that automatically generates schema changes, as I'm just never sure at what point it decides to delete parts of my prod db.

Customization

Another common concern is the lack of control over the generated migrations. Migration planning can be thought of as a navigation problem: how to get from point A to point B. Declarative migrations are like using a GPS: you tell it where you want to go, and it figures out the best route. But what if you want to travel through some specific coordinates?

When it comes to database schema management, there may be multiple ways to get from the current to the desired state, some of them completely undesirable. For instance, a simple column rename can also be achieved by dropping the column and creating a new one (thereby losing all the data in the column). Similarly, in PostgreSQL, creating an index may lock a table unless the CONCURRENTLY option is used.

Tools like Kubernetes and Terraform don't let the user specify the exact steps to get to the desired state, or require the development of custom plugins (such as Terraform Providers or Kubernetes Controllers) to achieve this. For database schema changes, this level of customization is often necessary.

Production-grade Declarative Migrations

Atlas was designed with these concerns in mind. In the following sections, we'll explore how Atlas addresses them to provide teams with a production-grade declarative migration workflow.

Pre-planning Migrations

To address users' need for knowing the exact migrations that will be applied to their database, Atlas provides the schema plan command. Users may run a command similar to:

atlas schema plan --env local --save

Atlas will calculate the plan, analyze it and present the user with a detailed report of the migrations that will be applied and their potential impact on the database:

Planning migration from local database to file://./schema.pg.hcl (1 statement in total):
  -- create "t1" table:
    -> CREATE TABLE "t1" (
         "c1" text NOT NULL
       );
-------------------------------------------
Analyzing planned statements (1 in total):
  -- no diagnostics found
-------------------------
  -- 19.389709ms
  -- 1 schema change
? Approve or abort the plan:
  ▸ Approve and save
    Abort

After saving, a "plan file" is created:

plan "20241029120559" {
  from      = "PKrr2qiovsNurI70kgT+AW3rInDu74E1PSOSHgh8CrA="
  to        = "Szdpl/ADvG8kKrXBERSxjvU/I1nprpBejpPX7fLoWmc="
  migration = <<-SQL
  -- Create "t1" table
  CREATE TABLE "t1" (
    "c1" text NOT NULL
  );
  SQL
}

The plan block contains three relevant fields:

- from - A "fingerprint" hash which encodes the "current" state of the database.

- to - A similar hash for the "desired" state of the database.

- migration - The SQL commands that will be applied to the database to bring it from the from state to the to state.

The from and to fields are significant because they allow Atlas to guarantee that the plan will only be executed if the database is in the known from state. This is a crucial safety feature that enables the deterministic execution of the plan.

The plan file can then be applied to the database using the schema apply command:

atlas schema apply --env local --plan file://20241029120559.plan.hcl
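The guard that the from and to hashes provide can be sketched in a few lines of Python. This is only an illustration of the idea, not Atlas's implementation: the post does not describe how Atlas computes its fingerprints, so the `fingerprint` function below (SHA-256 over whitespace-normalized schema text, base64-encoded) and the `Plan` and `check_plan` names are assumptions made for the example.

```python
import base64
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    """A saved plan: the state it expects, the state it produces, and the SQL."""
    from_hash: str
    to_hash: str
    migration: str


def fingerprint(schema_text: str) -> str:
    """Hash a schema description.

    ASSUMPTION: SHA-256 over whitespace-normalized text. The real
    fingerprinting scheme used by Atlas is not described in this post.
    """
    normalized = " ".join(schema_text.split())
    digest = hashlib.sha256(normalized.encode("utf-8")).digest()
    return base64.b64encode(digest).decode("ascii")


def check_plan(plan: Plan, current_schema: str, desired_schema: str) -> bool:
    """Return True only if the plan was computed for this exact (current, desired) pair."""
    return (
        plan.from_hash == fingerprint(current_schema)
        and plan.to_hash == fingerprint(desired_schema)
    )


current = ""  # an empty database
desired = 'CREATE TABLE "t1" ("c1" text NOT NULL);'
plan = Plan(fingerprint(current), fingerprint(desired), desired)

print(check_plan(plan, current, desired))  # True

# If the database has drifted since planning, the plan no longer applies.
drifted = 'CREATE TABLE "other" ("id" int);'
print(check_plan(plan, drifted, desired))  # False
```

The point of the design is that a pre-approved plan is never run against a database whose state differs from the one the plan was reviewed against.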

Customization

As we previously mentioned, there are often multiple ways to get from the current to the desired state of the database. To provide users with the ability to customize the generated migrations, Atlas provides two ways to control the process.

Diff Policies

Diff policies can be defined on the project level to control how Atlas generates migrations. For instance, a project may define a policy that indexes must be created or dropped concurrently:

env "local" {
  diff {
    // By default, indexes are not added or dropped concurrently.
    concurrent_index {
      add  = true
      drop = true
    }
  }
}

In some cases, users may want to tell Atlas to skip DROP operations completely:

env "local" {
  diff {
    skip {
      drop_schema = true
      drop_table  = true
    }
  }
}
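One rough mental model for a skip policy is a filter over the list of planned changes. The Python sketch below is an illustration only: the `Change` type, the change-kind labels, and `apply_skip_policy` are hypothetical names invented for this example and do not reflect how Atlas represents changes internally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    kind: str  # hypothetical labels, e.g. "add_table", "drop_table"
    sql: str


def apply_skip_policy(changes: list[Change], skip: set[str]) -> list[Change]:
    """Keep only the changes whose kind is not listed in the skip policy."""
    return [change for change in changes if change.kind not in skip]


planned = [
    Change("add_table", 'CREATE TABLE "t2" ("id" int NOT NULL);'),
    Change("drop_table", 'DROP TABLE "t1";'),
]

# Mirrors the intent of the skip block above: never plan these drops.
kept = apply_skip_policy(planned, skip={"drop_schema", "drop_table"})
for change in kept:
    print(change.sql)  # only the CREATE TABLE statement is printed
```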

Editing Plans

In some cases, users may want to edit the generated plan before applying it. With the --edit flag on the schema plan command, Atlas opens the plan in the user's default editor for review and modification.

When the user runs:

atlas schema plan --env local --edit --save

Atlas will calculate the plan, and open it in the user's default editor:

-- Create "t1" table
CREATE TABLE "t1" (
  "c1" text NOT NULL
);

The user may edit the plan as needed, adding or removing statements, and then save the plan.

-- Create "t1" table
CREATE TABLE "t1" (
  "c1" text NOT NULL
);
+ INSERT INTO "t1" ("c1") VALUES ('hello');

Atlas verifies that the edited plan is still valid and that the resulting schema is equivalent to the desired schema.

Suppose a user makes a change that is not in line with the desired schema, for instance, adding a column that is not in the desired schema:

-- Create "t1" table
CREATE TABLE "t1" (
  "c1" text NOT NULL,
+ "c2" text NOT NULL
);

Atlas will detect the drift and present the user with an error message:

Abort: the planned state does not match the desired state after applying the file:
  --- planned state
  +++ desired state
  @@ -1,3 +1,4 @@
   CREATE TABLE "t1" (
  -  "c1" text NOT NULL
  +  "c1" text NOT NULL,
  +  "c2" text NOT NULL
   );
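The check on edited plans can be illustrated with a small Python sketch: run the edited plan on a scratch database, run the desired schema on another, and compare the resulting table definitions. This is a simplified stand-in under stated assumptions, not Atlas's verification logic: it uses an in-memory SQLite database instead of PostgreSQL, starts from an empty database, compares only column names, types, and NOT NULL flags, and the function names are hypothetical.

```python
import sqlite3


def schema_after(sql_script: str) -> dict[str, list[tuple[str, str, int]]]:
    """Run a script on a scratch in-memory database and describe the resulting tables."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.executescript(sql_script)
        tables = [
            row[0]
            for row in conn.execute(
                "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
            )
        ]
        described = {}
        for table in tables:
            # PRAGMA table_info rows: (cid, name, type, notnull, dflt_value, pk)
            columns = conn.execute(f'PRAGMA table_info("{table}")').fetchall()
            described[table] = [(c[1], c[2], c[3]) for c in columns]
        return described
    finally:
        conn.close()


def plan_matches_desired(edited_plan: str, desired_schema: str) -> bool:
    """True if applying the edited plan yields the same tables as the desired schema."""
    return schema_after(edited_plan) == schema_after(desired_schema)


desired = 'CREATE TABLE "t1" ("c1" text NOT NULL);'

# Adding data is fine: the resulting schema is unchanged.
ok_edit = desired + """\nINSERT INTO "t1" ("c1") VALUES ('hello');"""

# Adding a column is not: the resulting schema differs from the desired one.
bad_edit = 'CREATE TABLE "t1" ("c1" text NOT NULL, "c2" text NOT NULL);'

print(plan_matches_desired(ok_edit, desired))   # True
print(plan_matches_desired(bad_edit, desired))  # False
```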

Safety Checks

In addition to its advanced diffing capabilities, Atlas contains a migration analysis engine that can simulate changes and detect potential issues before they occur. Until recently, this engine was only available to users of the versioned migration workflow via the migrate lint command. However, in the latest release, we've included the analysis step in the schema plan and schema apply commands for declarative migrations.

When a user runs the schema plan command, Atlas will analyze the plan and present the user with a detailed report of the potential impact of the migrations.

For instance, if a user tries to drop a column or table, Atlas will present a warning:

Planning migration from local database to file://./schema.pg.hcl (1 statement in total):
  -- drop "t1" table:
    -> DROP TABLE "t1";
-------------------------------------------
Analyzing planned statements (1 in total):
  -- destructive changes detected:
    -- L2: Dropping table "t1"
       https://atlasgo.io/lint/analyzers#DS102
  -- suggested fix:
    -> Add a pre-migration check to ensure table "t1" is empty before dropping it
-------------------------
  -- 16.281417ms
  -- 1 schema change
  -- 1 diagnostic
? Approve or abort the plan:
  ▸ Approve and save
    Abort

For a full list of diagnostics and suggested fixes, users can visit the Atlas Lint Analyzers page.
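To make the idea of a destructive-change check concrete, here is a deliberately naive Python sketch that scans a migration line by line for DROP TABLE and DROP COLUMN statements. It is an assumption-laden toy: Atlas's analyzers are described above as simulating changes, whereas this example only pattern-matches SQL text, and the `Diagnostic` type and `find_destructive_changes` function are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Diagnostic:
    line: int
    message: str


# ASSUMPTION: simple pattern matching stands in for real statement analysis.
_DROP_TABLE = re.compile(
    r'^\s*DROP\s+TABLE\s+(?:IF\s+EXISTS\s+)?"?(\w+)"?', re.IGNORECASE
)
_DROP_COLUMN = re.compile(
    r'^\s*ALTER\s+TABLE\s+"?(\w+)"?\s+DROP\s+COLUMN\s+"?(\w+)"?', re.IGNORECASE
)


def find_destructive_changes(migration: str) -> list[Diagnostic]:
    """Report each line that drops a table or a column."""
    found = []
    for number, line in enumerate(migration.splitlines(), start=1):
        if m := _DROP_TABLE.match(line):
            found.append(Diagnostic(number, f'Dropping table "{m.group(1)}"'))
        elif m := _DROP_COLUMN.match(line):
            found.append(
                Diagnostic(
                    number,
                    f'Dropping column "{m.group(2)}" of table "{m.group(1)}"',
                )
            )
    return found


migration = '-- drop "t1" table\nDROP TABLE "t1";'
for diagnostic in find_destructive_changes(migration):
    print(f"L{diagnostic.line}: {diagnostic.message}")  # L2: Dropping table "t1"
```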

Modern CI/CD Workflows with Declarative Migrations

Atlas was designed to be used in modern CI/CD workflows, and the declarative migration workflow is no exception. In the final section of this post, we'll explore how teams can integrate Atlas into their CI/CD pipelines to ensure that:

- All changes to the database schema result from changes to the desired state.

- Changes are planned automatically during the CI process.

- Changes are analyzed automatically to detect potential issues.

- Changes are approved by the team before being applied to the database.

- Changes are applied to the database in a deterministic and safe manner.

Demo: CI/CD Workflow with Atlas

Here's a quick demo video of how to integrate Atlas into a CI/CD pipeline using GitHub Actions:


To summarize, our demo CI/CD workflow works as follows:

- The user changes the desired state of the database, in our case a file named schema.sql.

- The user commits the changes to the schema.sql file and creates a pull request.

- GitHub Actions runs the atlas schema plan command to calculate the migration plan.

- The Action creates a comment on the pull request with the following information:

  - The migration plan.

  - An analysis of the changes, including any potential issues.

  - Instructions for manually editing the plan if needed.

- The user reviews the plan and approves the PR.

- Once the PR is merged, GitHub Actions runs another action that pushes the new state and plan to the Schema Registry.

- Once the desired state and plan are in the Schema Registry, the user can deploy the changes to the database using the atlas schema apply command.

A word about the Schema Registry

The Schema Registry is a fairly new concept introduced by Atlas. The registry is a central location where schemas and migration plans can be stored and shared among team members. You can think about it as a "DockerHub for Schema Management" or an "Artifact Repository for Database Schemas".

As we saw, it is possible to simply use files to store the desired state and migration plans, but the Schema Registry provides several advantages:

- Source of Truth - the registry can be used as the source of truth for the database schema, ensuring that all team members are working with the same schema and that all changes are tracked and go through a structured review process. This is similar to how teams use DockerHub to store and share container images, making sure that only code that went through a team's CI/CD pipeline makes its way to production.

- Streamlined Deployment - as we will see below, the registry isn't a static blob storage, but a dynamic service that can be queried and interacted with. This allows the Atlas CLI to fetch only relevant and approved migration plans during deployment, enforcing the deterministic and safe execution of the migrations.

- Visualizations and Insights - the registry can be used to generate visualizations of the schema, track changes over time, and provide insights into the state of the database. This can be useful for auditing, debugging, and planning future changes.

How everything fits together

As we saw in the demo, the Schema Registry is a central component of the CI/CD workflow. It acts as the source of truth for the database schema and migration plans, ensuring that all changes are tracked and reviewed before being applied to the database.

Here's what happens when a user runs the atlas schema apply command:

- Atlas inspects the target database, fetches the current state of the schema, and assigns it a "fingerprint" hash.

- Atlas analyzes the desired state of the database and calculates the hash for that state.

- Atlas uses this tuple of (from, to) hashes to search the Schema Registry for a matching migration plan.

- If a plan is found, Atlas verifies that it is approved and safe to apply.

- If the plan is approved, Atlas applies the plan to the database.
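The lookup described in these steps can be sketched as a small Python model in which plans are indexed by their (from, to) hash pair. This is an in-memory illustration only; `PlanRegistry`, `StoredPlan`, and `resolve_migration` are hypothetical names, and the real Schema Registry is a service whose API is not shown in this post.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StoredPlan:
    migration: str
    approved: bool


class PlanRegistry:
    """In-memory stand-in for a registry that indexes plans by (from, to) hashes."""

    def __init__(self) -> None:
        self._plans: dict[tuple[str, str], StoredPlan] = {}

    def push(self, from_hash: str, to_hash: str, plan: StoredPlan) -> None:
        self._plans[(from_hash, to_hash)] = plan

    def find(self, from_hash: str, to_hash: str) -> Optional[StoredPlan]:
        return self._plans.get((from_hash, to_hash))


def resolve_migration(
    registry: PlanRegistry, from_hash: str, to_hash: str
) -> Optional[str]:
    """Return the SQL to run, or None if no approved plan matches this transition."""
    plan = registry.find(from_hash, to_hash)
    if plan is None or not plan.approved:
        return None
    return plan.migration


# Hashes copied from the plan file shown earlier in this post.
FROM_HASH = "PKrr2qiovsNurI70kgT+AW3rInDu74E1PSOSHgh8CrA="
TO_HASH = "Szdpl/ADvG8kKrXBERSxjvU/I1nprpBejpPX7fLoWmc="

registry = PlanRegistry()
registry.push(
    FROM_HASH,
    TO_HASH,
    StoredPlan('CREATE TABLE "t1" ("c1" text NOT NULL);', approved=True),
)

print(resolve_migration(registry, FROM_HASH, TO_HASH) is not None)   # True
print(resolve_migration(registry, "some-other-state", TO_HASH))      # None
```

Keying on the pair rather than on the target state alone is what ties an approved plan to one specific starting point.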

What happens if an approved plan is not found?

By default, Atlas will not apply any changes to the database if an approved plan is not found. This is a safety feature that ensures that only changes that have been reviewed and approved by the team are applied to the database.

However, in some cases, users may want to apply changes to the database that have not been approved. After all, most schema changes are simple and additive and don't pose any risk to the database. Additionally, some environments are mostly used for testing and development and don't require the same level of scrutiny as production.

In cases where no approved plan is found, Atlas will calculate the plan and run it through analysis ("linting").

Atlas provides a mechanism called "Review Policies" to define what should happen based on the linting results.

Review policies are defined in the project configuration file using the lint.review attribute:

lint {
  review = ERROR // ERROR | WARNING | ALWAYS
}

The review policy can be set to one of the following values:

- ERROR - Atlas will require manual review and approval only if the linting report contains errors, i.e., one of the analyzers is configured to return an error. See the destructive-changes analyzer as an example.

- WARNING - Atlas will require manual review and approval if the linting report contains warnings (diagnostics) or errors. See the list of checks that can be detected by the analyzers.

- ALWAYS (default) - Atlas will always require manual review and approval, regardless of the linting report.
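The three policy values boil down to a small decision rule, sketched below in Python. The `ReviewPolicy` enum and `needs_manual_review` function are hypothetical names used for illustration; only the three values and their meaning come from the list above.

```python
from enum import Enum


class ReviewPolicy(Enum):
    ERROR = "ERROR"
    WARNING = "WARNING"
    ALWAYS = "ALWAYS"


def needs_manual_review(policy: ReviewPolicy, errors: int, warnings: int) -> bool:
    """Decide whether an unapproved plan must be reviewed by a human.

    `errors` and `warnings` are the counts found in the linting report.
    """
    if policy is ReviewPolicy.ALWAYS:
        return True
    if policy is ReviewPolicy.WARNING:
        return errors > 0 or warnings > 0
    # ReviewPolicy.ERROR: only errors force a review.
    return errors > 0


# A plan whose report contains one warning and no errors:
print(needs_manual_review(ReviewPolicy.ERROR, errors=0, warnings=1))    # False
print(needs_manual_review(ReviewPolicy.WARNING, errors=0, warnings=1))  # True
print(needs_manual_review(ReviewPolicy.ALWAYS, errors=0, warnings=0))   # True
```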

Wrapping Up

In this post, we've taken a deep dive into the declarative migration workflow in Atlas. Here's what we covered:

- Until recently, declarative migrations were considered a risky and unreliable way to manage database schemas.

- The top challenges teams have with declarative migrations are around approval, safety, and customization.

- Atlas addresses these concerns by providing a way to review and approve changes, analyze and simulate changes, and customize the generated migrations.

- Atlas can be integrated into modern CI/CD workflows to ensure that all changes to the database schema are tracked, reviewed, and applied in a deterministic and safe manner.

- The Schema Registry is a central component of the CI/CD workflow, acting as the source of truth for the database schema and migration plans.

- Atlas provides a mechanism called "Review Policies" to define what should happen based on the linting results.

We hope this post has given you a better understanding of how Atlas can be used to manage database schemas in a declarative way. If you have any questions or would like to learn more, please don't hesitate to reach out to us on our Discord server.