Schema monitoring for ClickHouse using Atlas

Automatic ER Diagrams and Docs for ClickHouse

When working with a database like ClickHouse, understanding its schema becomes essential for many teams across the organization. Who cares about the schema? Almost everyone who interacts with your data:

- Software engineers and architects use knowledge about the schema to make design decisions when building software.

- Data engineers need an accurate understanding of schemas to build correct and efficient data pipelines.

- Data analysts rely on familiarity with the schema to write accurate queries and derive meaningful insights.

- DevOps engineers, SREs, and production engineers use schema information (especially recent changes to it) to triage database-related production issues.

Clear, centralized documentation of your database's schema and its changes helps teams work efficiently and collaborate. Recognizing this, many teams have adopted one of several strategies for providing this kind of documentation:

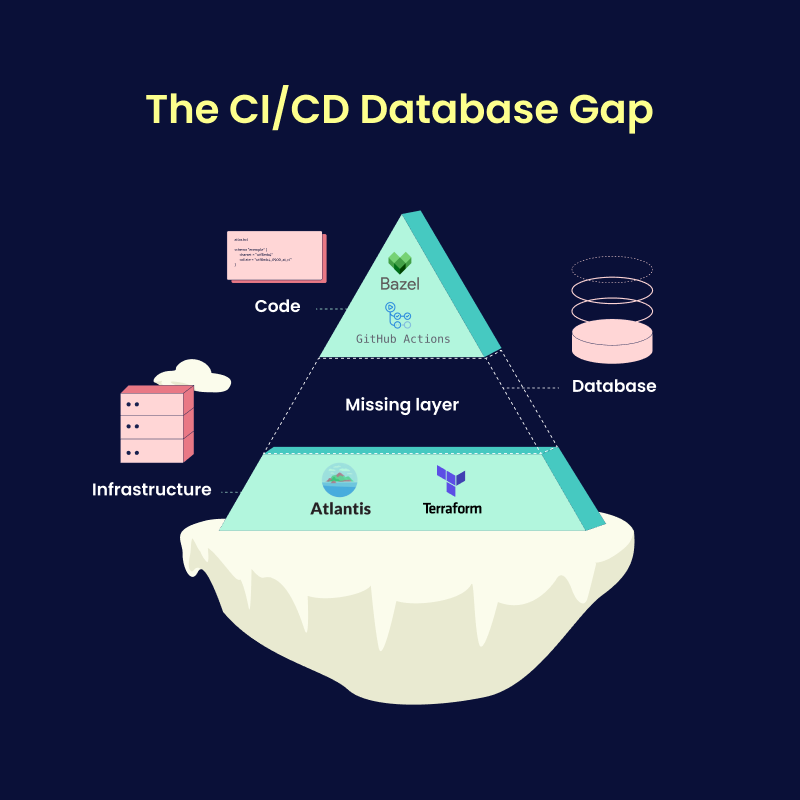

- Diagramming tools. Teams use generic diagramming tools like Miro or Draw.io to maintain ER (Entity-Relationship) diagrams of their database schema. While this is easy to set up, every schema change requires a manual update, so the diagrams often go stale and become obsolete.

- Data modeling tools. Alternatively, teams use database tools like DataGrip or DBeaver. While these tools automatically inspect your database, understand its schema, and provide interactive diagrams, they have two main downsides: 1) since they run locally, each user needs a direct connection to the database and credentials for it, which introduces a potential security risk; 2) they offer no way to collaborate on, discuss, or share schema information.

- Enterprise data catalogs. Tools like Atlan or Alation provide extensive schema documentation and monitoring; however, they can be expensive and difficult to set up.

Enter: Atlas Schema Monitoring

Atlas offers an automated, secure, and cost-effective solution for monitoring and documenting your ClickHouse schema.

With Atlas, you can:

- Generate ER Diagrams: Visualize your database schema with up-to-date, easy-to-read diagrams.

- Create Searchable Code Docs: Enable your team to quickly find schema details and usage examples.

- Track Schema Changes: Keep a detailed changelog to understand what's changed and why.

- Receive Alerts: Get notified about unexpected or breaking changes to your schema.

All this without granting everyone on your team direct access to your production database.