Reporting schema migrations to Atlas Cloud

Deploying without Atlas Cloud

A common way to deploy migrations using Atlas (or any other migration tool) is similar to this:

- When changes are merged to the main branch, a CI/CD pipeline is triggered.

- The pipeline builds an artifact (usually a Docker image) that includes the migration directory content and Atlas itself.

- The artifact is pushed to a registry.

- The deployment process is configured to use this newly created image to run the migrations against the production database.
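
The steps above can be sketched as a short shell script. This is a minimal sketch under stated assumptions, not a definitive pipeline: the function names, the registry path, the `/migrations` location inside the image, and the assumption that the image's entrypoint is the Atlas binary are all illustrative and need adjusting to your setup.

```shell
#!/bin/sh
# Sketch of a build/push/run pipeline. All names here are hypothetical.

# image_ref REPO COMMIT
# Prints an image reference tagged with the first 7 characters of the commit hash.
image_ref() {
  printf '%s:%s\n' "$1" "$(printf '%s' "$2" | cut -c1-7)"
}

# deploy_migrations REPO COMMIT DATABASE_URL
# Builds the artifact, pushes it to the registry, and runs the migrations.
deploy_migrations() {
  ref="$(image_ref "$1" "$2")"

  # Build an artifact that bundles the migration directory and Atlas.
  docker build -t "$ref" . || return 1

  # Push the artifact to the registry.
  docker push "$ref" || return 1

  # Run the migrations against the target database.
  # Assumes the image entrypoint is the atlas binary and that the
  # migration files were copied to /migrations at build time.
  docker run --rm "$ref" migrate apply \
    --dir "file:///migrations" \
    --url "$3"
}
```

A CI job could then call something like `deploy_migrations registry.example.com/myapp-migrations "$(git rev-parse HEAD)" "$DATABASE_URL"`. Every service needs its own variant of this glue, which is the overhead described next.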

This approach is common, but it requires setting up a CI/CD pipeline (including storage, permissions, and other glue) for each service, which adds another layer of complexity.

Why deploy from Atlas Cloud?

Atlas Cloud streamlines deploying migrations by providing a single place to manage migrations for all your services. After connecting your migration directory to Atlas Cloud, it is automatically synced to a central location on every commit to your main branch. Once this setup (which takes less than one minute) is complete, you can deploy migrations from Atlas Cloud to any environment with a single command (or using popular CD tools such as Kubernetes and Terraform).

Report Migrations to Atlas Cloud

To read the migration directory from the Atlas Registry, use the atlas:// scheme in the migration URL as follows:

    env {
      // Set environment name dynamically based on --env value.
      name = atlas.env
      migration {
        // In this example, the directory is named "myapp".
        dir = "atlas://myapp"
      }
    }

Now you can execute schema migrations and report their result to Atlas Cloud using the following command:

    export ATLAS_TOKEN="<ATLAS_TOKEN>"

    atlas migrate apply \
      --url "<DATABASE_URL>" \
      --config file://path/to/atlas.hcl \
      --env prod

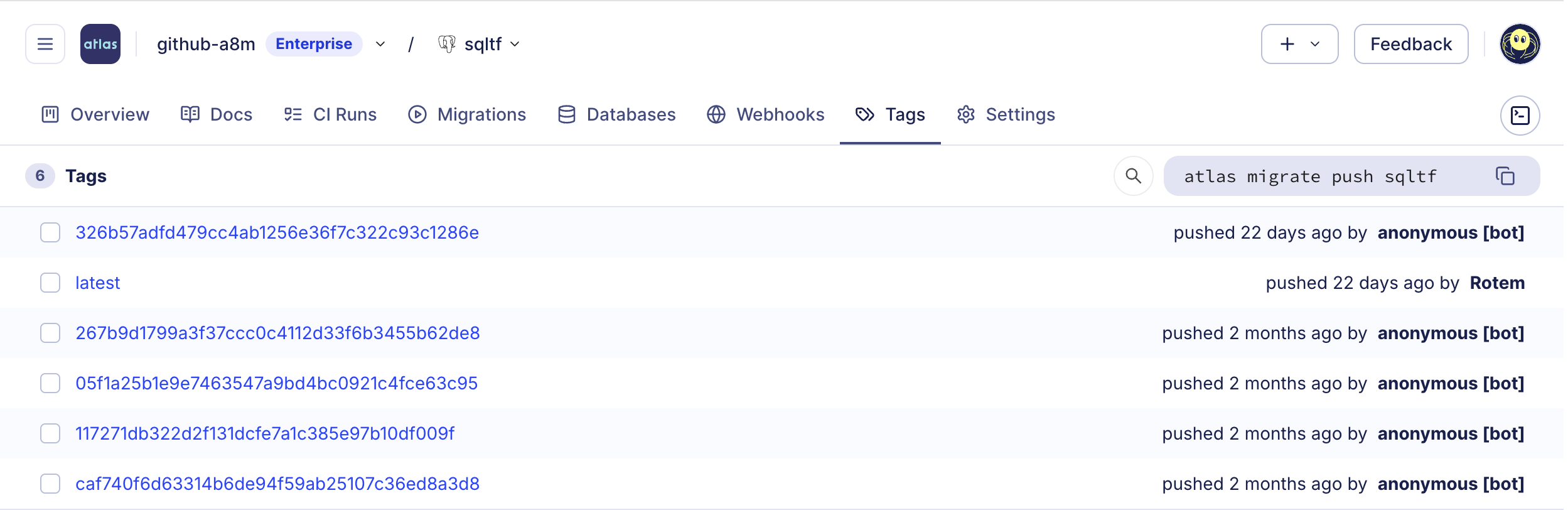

If you set up CI for your project to push the migration directory to Atlas Cloud, you can deploy a specific migration tag by setting the tag query string in the directory URL. For example:

    # Short SHA-1 hash.
    --dir "atlas://myapp?tag=267b9d1"

    # Full tag.
    --dir "atlas://myapp?tag=267b9d1799a3f37ccc0c4112d33f6b3455b62de8"
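
When tags are commit hashes, as in the examples above, the directory URL can be built from the commit being deployed. The helper below is a minimal sketch; the function name `atlas_dir_url` is hypothetical and not part of Atlas.

```shell
#!/bin/sh
# atlas_dir_url NAME [TAG]
# Prints an atlas:// directory URL for the directory NAME.
# When TAG is given, it is added as the "tag" query string;
# otherwise the plain atlas://NAME URL is printed.
atlas_dir_url() {
  if [ -n "${2:-}" ]; then
    printf 'atlas://%s?tag=%s\n' "$1" "$2"
  else
    printf 'atlas://%s\n' "$1"
  fi
}
```

For example, `atlas_dir_url myapp "$(git rev-parse HEAD)"` prints a URL that pins the deployment to the current commit, assuming that commit was pushed to Atlas Cloud under its hash as the tag. The result can be passed to the `--dir` flag.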

Visualizing Migration Runs

Schema migrations are an integral part of application deployments, yet the setup varies between applications and teams. Some teams prefer init-containers, while others run migrations from a structured CD pipeline. Others opt for Helm upgrade hooks or use our Kubernetes operator. The differences also apply to databases: some applications work with one database, while others manage multiple databases, as is often the case in multi-tenant applications.

However, across all these scenarios, there's a shared need for a single place to view and track the progress of executed schema migrations. This includes triggering alerts and providing the means to troubleshoot and manage recovery if problems arise.

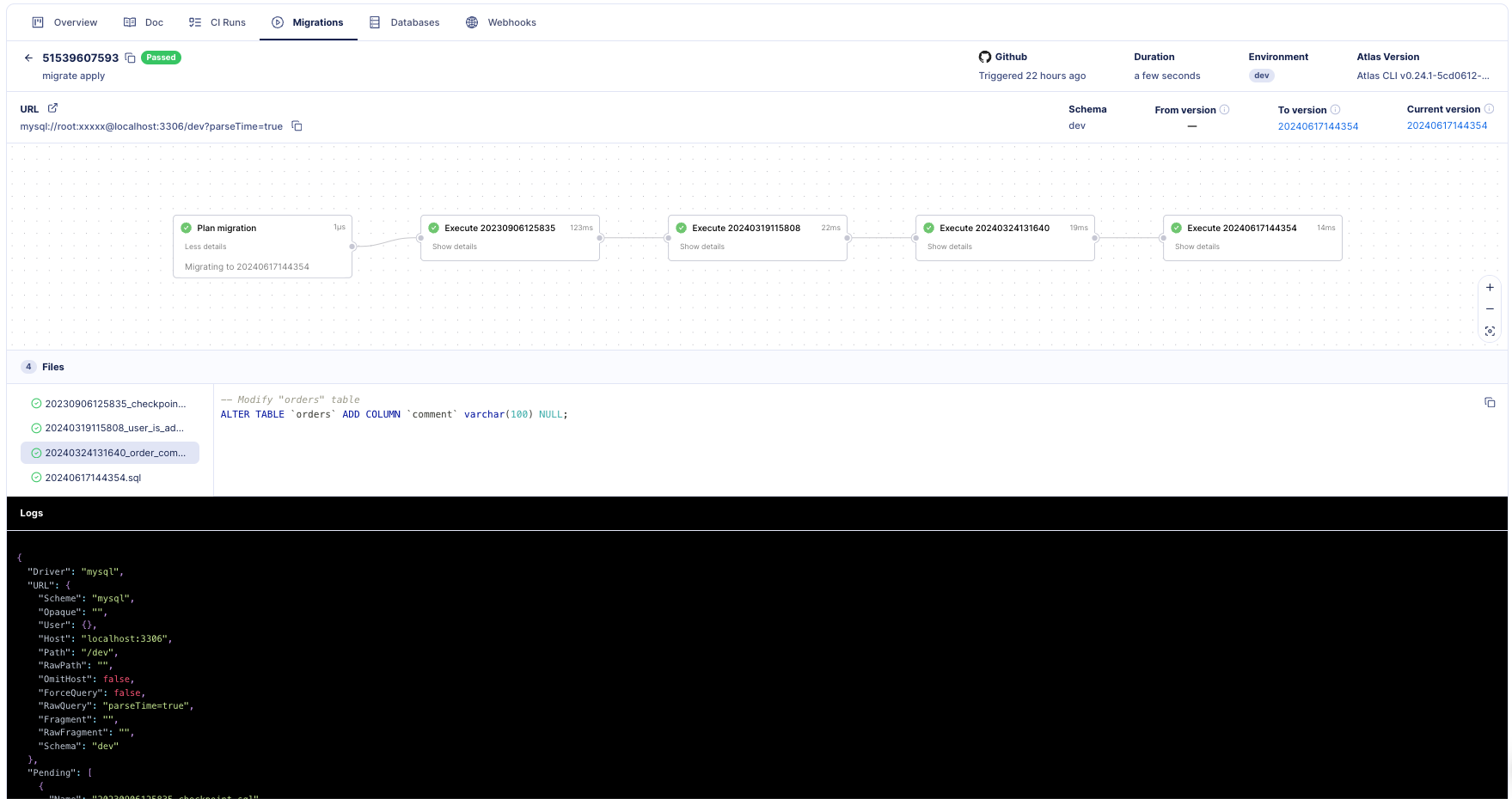

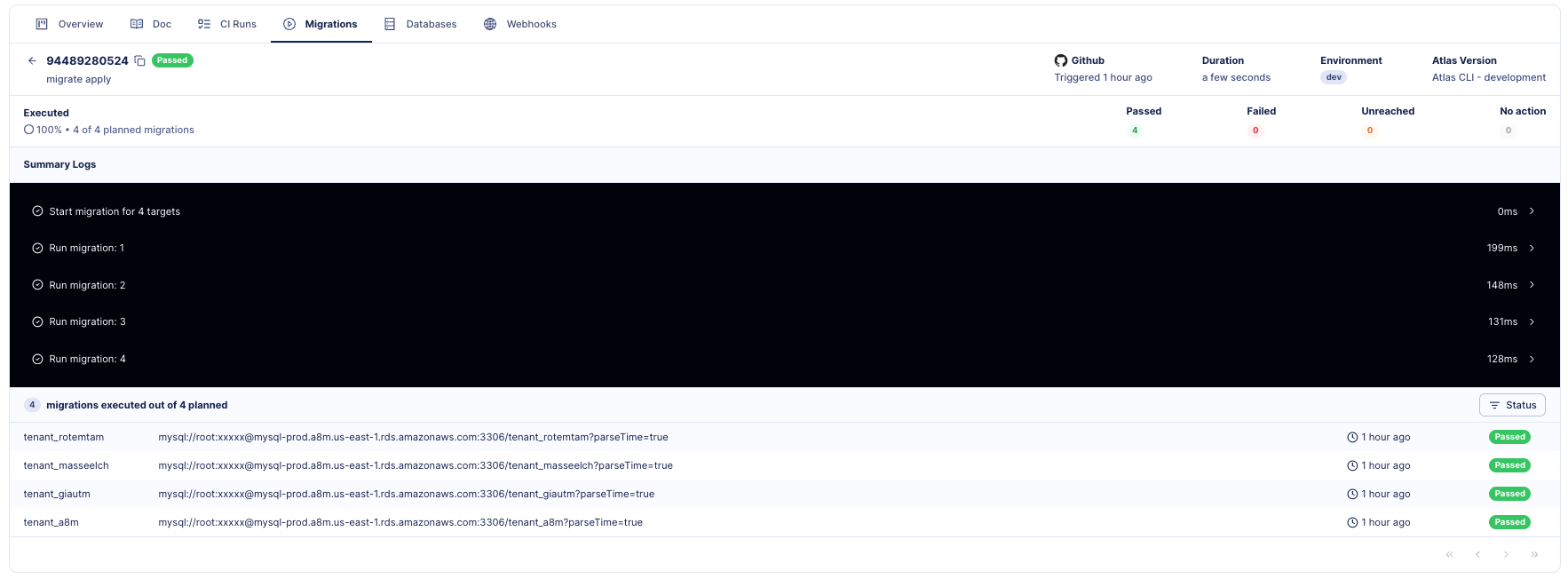

When you use the configuration above with a valid token, Atlas will log migration runs in your cloud account. Here's a demonstration of how it looks in action:

- Single Deployment

- Multi-Tenant Deployment